Introduction

In the previous posts on Microsoft SQL server I’ve shown how to create a basic SSIS package to transfer data from an Excel file to a data base, iterate through multiple sheets in an Excel file, using XML configuration files and expression etc. In this post I’ll try to give a basic understanding of how to use Lookup Transformations to extract data required to be entered in to a data table from a related table using data common to both tables. I will also be also be going in to detail about difficulties faced when handling Lookup no match scenarios in SSIS due to certain design constraints and a work around that can be used.

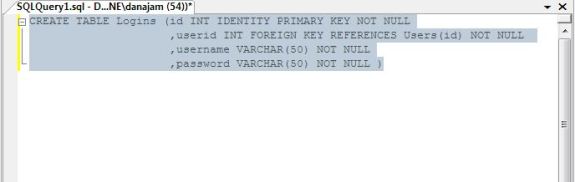

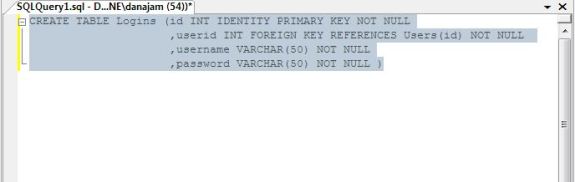

In this post I’ll be using two SQL server database tables. One is the User table I’ve used in all my previous posts and the other is a new Logins table. The Logins table contains the passwords and usernames of the users in the User table. Thus the Login table refers to the relevant user by the user id mentioned in the User file. The user id is included in the Logins table as a foreign key referring to the User table. I created the Logins table using the following query.

Query to create the logins table

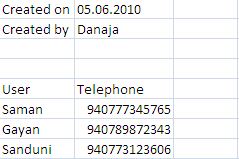

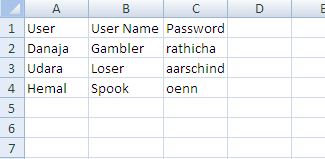

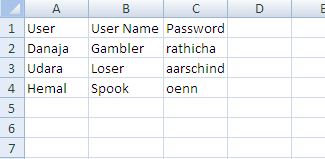

Also I’ve created a Excel file containing the three columns User, Username and Password with 3 entries in two sheets.

sample input in Logins File

Step 1:

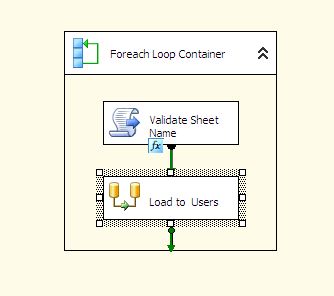

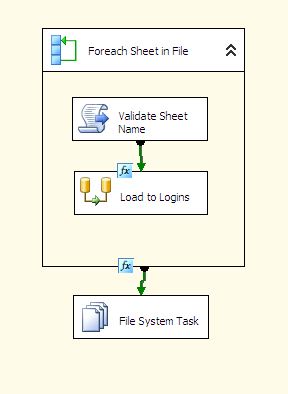

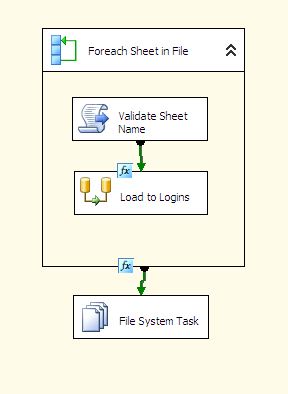

The first few steps of creating a SSIS package that can iterate through the Logins Excel file can be done based on the following post. Once done the created package will look something like this.

the Control Flow Design after step 1

The data flow task will contain will contain an Excel source component that uses the iterating sheet variable and a data conversion component to convert data in to a type compatible with the database table data types in sequence.

Step 2:

Now move to the data flow design area and drag and drop a look up component and connect the data conversion component to it.

Data flow design after adding a Look up component

We’ll use this component to match the names from the User column in our Logins Excel file to the Name field in the Users data base table and extract the id which will be stored in the userid field of the Logins table.

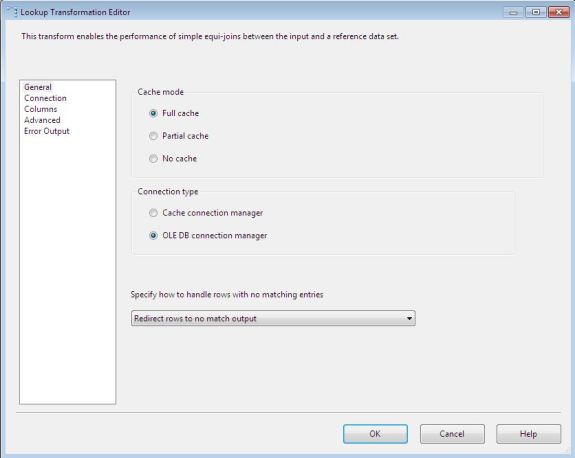

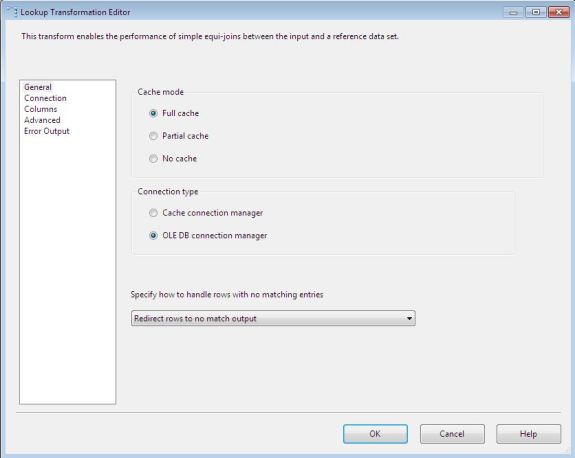

Now right click the Look up component and select Edit. Under the general settings you should select the “Redirect rows to no match output” so that you can separately deal with data rows from the Logins table that have no corresponding entries in the Users table. By default this is set to fail the component when there are no match rows.

General settings of the Look up component

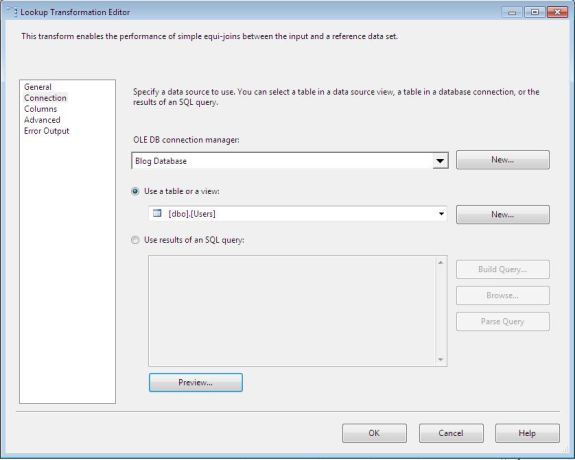

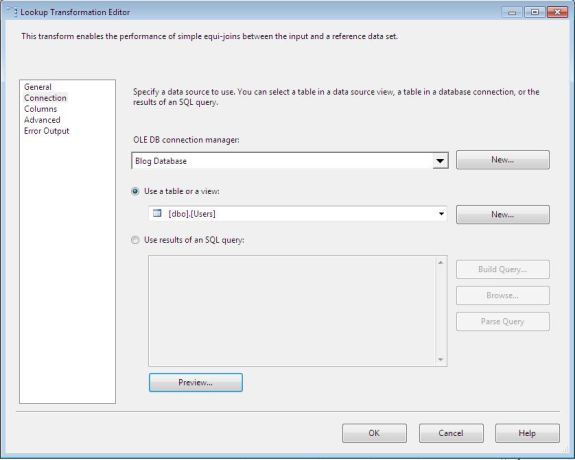

Next from the connection settings we need to set the database and table we’ll be connecting to in performing the look up which in this case is the Users table.

Setting the look up table

You can test whether the the connection is successful by previewing the contents of the Users table by using the preview button.

Note: you can perform lookups on the result sets of custom SQL queries as well. The query can be inserted area seen to be inactive in the above image. However during the development carried out thus far during my internship it has been considered good practice to write the actual query as a stored procedure and to call the SP from the lookup component.

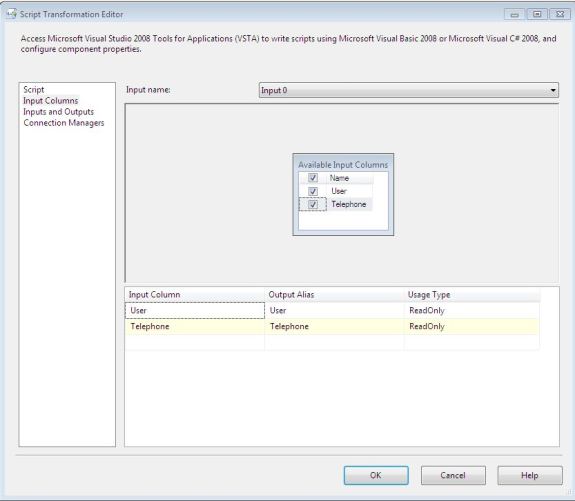

Next in the column settings we can provide the field that need to be matched from the Excel file and the Users table and also specify the field to be extracted and added to the data flow from the table on matching. As you can see from the image below the converted user field from the Excel file has been matched with the name field in the User table and the id field is added to the data flow.

Performing the Look up

Step 3:

Once the look up component is properly set up drag and drop a slowly changing dimension component from transformations and connect the Look up component to id. In the settings give the Logins table as the destination table and match the columns from the data flow to the relevant fields in the table.Also set the id field to be a business key, the username field as a fixed attribute and the password field as a changing attribute. If you are new to the slowly changing dimension component look in my post on creating a basic SSIS package.

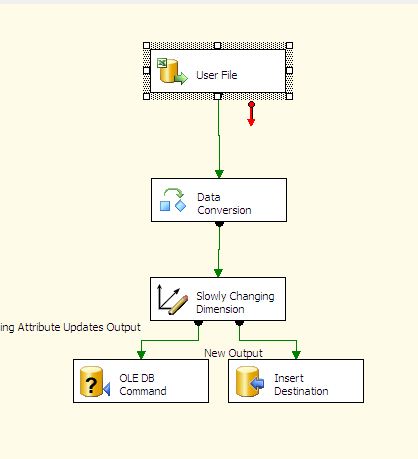

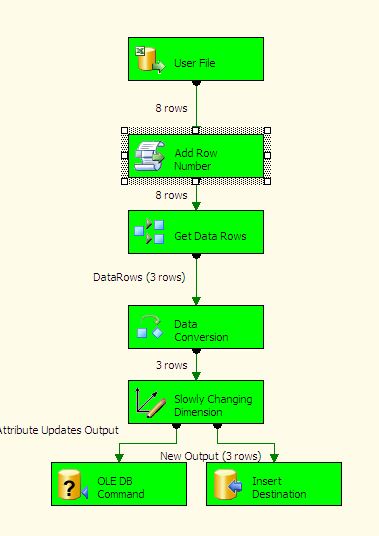

At the end of this step the data flow design of the package will look something like this.

Data flow design with a slowly changing dimension added

Now the package can be run and given that I added entries in Login Excel file that match already available entries in the Users table there won’t be any errors and the new entries will be entered in to the Login table.

But what if there was a entry in the file that had no corresponding entry in the Users table?. Then that entry would have been directed to the no match output of the Look up component as we specified. As that output is not yet utilized nothing will be done about no match rows.

As I have experienced in the last few months developing ETLs it’s normal practice do dump these rows in to a text file and insert a record in to a error log. Lets try doing the first part.

Step 4:

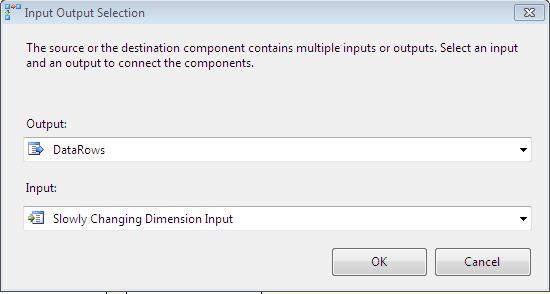

Drag and drop a flat file destination from the tool box in to the design flow area. Drag and drop the green arrow on the Look up component on to the flat file destination component. From the pop up options on which data stream to be transfered select the “No match rows”. This would send any entries that have no matching User table entries to the text file we are going to create.

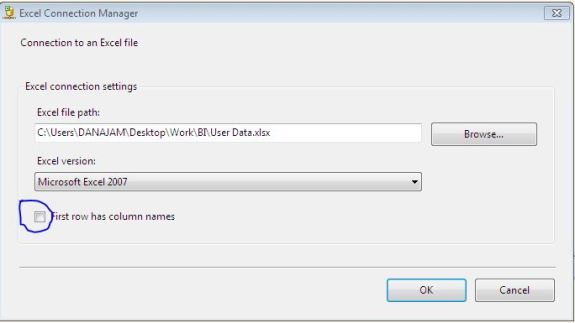

Next we can configure the flat file destination as follows. In the edit screen create a new connection manager by clicking new. For the moment give the location of any text file in your machine as the path in the connection manager and set the other properties as shown below by the images.

Configuring the flat file destination 1

Configuring the flat file destination 2

Now back at the flat file destination edit screen move to the column section and set the order in which the fields from the data flow will appear in the text file.

Configuring the flat file destination 3

You can give the actual path of the file that needs to be created as an expression to the connectionString property of the flat file connection manager that will be now created by creating a package variable that contains the path.The flat file destination has now been configured and the package can be run again . Note: You should remove the entries inserted in to the database by previous runs of the package before execution.

It is here that we come across the first the first problem. Notice the circled area in the image below.

post execution view of the data flow

I have executed the package with the same set of data as before which doesn’t have any no match rows. This means that there would be no rows in the no match output of the Lookup User component. I originally expected that without input the flat file destination component would simply not execute. But instead it did and just created an empty file. Imagine A set of ETL packages having multiple Lookups each dumping no match rows in to text files. Even if there are no no match rows using the above approach these ETL’s would generate dozens of empty files.

So lets take a misguided swipe at the problem and see how I came to the next larger issue.

Step 5:

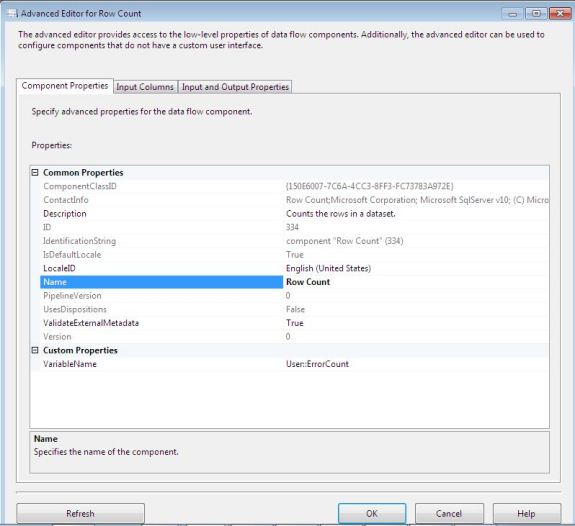

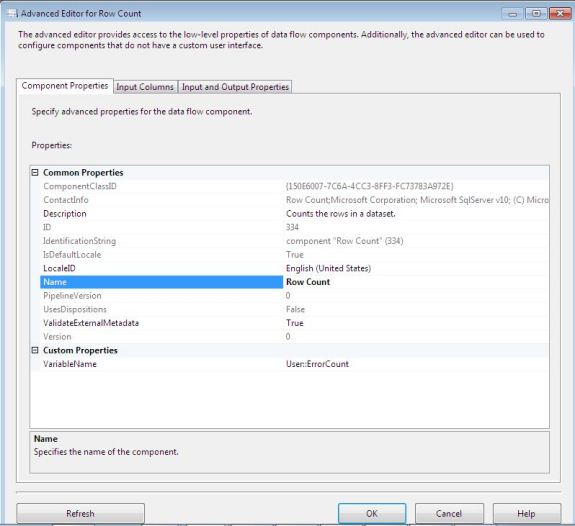

Create a integer variable “ErrorCount” with the scope set to the package(Note I tried this with the scope set to just the Data flow and the result was identical). Now drag and drop a RowCount component on to the design area and connect it the Look up component after disconnecting the lookup from the flat file destination. Move to the edit screen of the component and provide the Error Count variable we created as the variable to store the number of rows of no match rows.

Capturing the no match row count

Then add a Conditional split component from the tool box and connect the row count component to it. In the edit screen we can give a condition which splits the data flow based on whether there are actually any no match data rows or not. At least thats the idea 😉

This can be done as shown in the image below.

Splitting the data flow based on no match rows

Now the Conditional split component can be connected to the flat file destination and the package can be executed. The expectation here is that the adjustment will result in the flat file destination component being executed only when there are actually some no match rows.

However the flat file destination component still executes even with this approach. This is because at the data flow all components will be executed based on the logical procession. If you want to control the execution that has to be done at the control flow level.

Also we can’t use a script component to to simply check the condition using C# or VB.NET and create the error file or not using .NET methods because the variable Error Count will not actually have the value of rows set at the time we check it.

This is due to the fact that a change in the value of a package variable of any scope within a data flow task will actually be reflected in that variable only after the flow exits that components. In other word s a change in a package variable within a data flow task can’t be used within the same task.

Step 6:

So those are the problems and this step shows my solution to it.

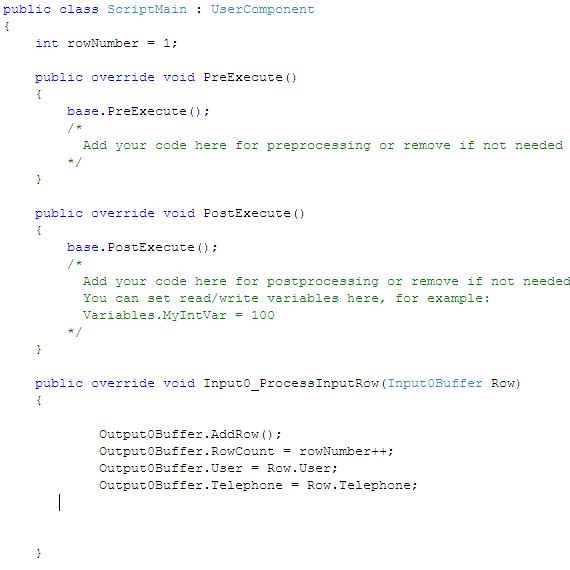

Remove the row count and conditional split components we added in step 5 and instead add a script component.Connect the component to the lookup User component and provide the ErrorCount as a

read and write variable to the script component. In the script editor of the component add the following code.

Capturing the total number of no match rows

This code simply creates a local integer variable which increments for each no match row during a single execution of the script component and in the post execute method the total per execution is added to the ErrorCount variable. The reason += is used in setting the Error count is because in multiple Excel sheet scenarios if sheets with no match rows are followed by ones without the use of equal sing only will may give zero as the error count which would be incorrect.

Now connect this component to the flat file destination. The text file is still going to be created whether or not there are no match rows and this will be handled in the control flow using the value of the ErrorCount variable.

Step 7:

Now move to back to the control flow and drag and drop a File System Task on to the design area and connect the Foreach loop to it. Select and right click the connection in between the file system task and add the a flow condition as shown.

Setting a control flow condition to delete empty error files

Now select the File System task and edit it as follows to delete the error file that is created within the data flow task.

Setting up the file system task to delete the error file

Because of the condition applied to the input connection of the file system task the error file will now be removed only if its empty. So the net result is that there will be an error file after execution only if there were no match rows.

The control flow design of the completed package is seen below.

Control Flow of the Completed package